👉 Settings for your compute queues

A public compute grid

To solve the problem of “where does the compute run”, we have built an open-source public compute grid.

It’s simply a public work queue, where clients submit docker container jobs, and workers pick up the jobs and run them. Queues are set in the URLs:

[https://container.mtfm.io/#?queue=some-queue](https://container.mtfm.io/#?queue=some-queue)
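As a minimal sketch, a queue URL of the form shown above could be assembled like this. The helper names `queue_url` and `new_queue_name` are hypothetical, and the sketch assumes that any sufficiently random string is acceptable as a queue name, which this page does not confirm.

```python
import secrets
from urllib.parse import urlencode

BASE_URL = "https://container.mtfm.io/"


def new_queue_name() -> str:
    """Return a random, hard-to-guess queue name (hypothetical helper).

    Assumption: the service accepts an arbitrary string as the queue name.
    """
    return secrets.token_hex(32)


def queue_url(queue: str) -> str:
    """Return a container metaframe URL that selects `queue`.

    The documented form is `#?queue=<name>`, so the parameters are
    placed after the hash rather than in the regular query string.
    """
    return f"{BASE_URL}#?{urlencode({'queue': queue})}"


print(queue_url("some-queue"))
# → https://container.mtfm.io/#?queue=some-queue
```

Because the queue name is the only thing protecting the queue, a generated name should be treated like a password.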

You can submit jobs via POST request, but you can also define and run jobs via URLs:

https://container.mtfm.io/#?… (the link is long!)

This means that the URL both defines the code AND where and how to run the code, so it’s a self-contained environment for safe code execution.

Containers standardize otherwise complex compute environments. By running a worker agent on your machine(s), you can then power container metaframes, each passing data as inputs and outputs to other metaframes in your workflow.

Run docker containers in the browser

Metapage workflows run in the browser. However, many scientific workflows need to run languages like Python or R, which do not (yet) run natively in the browser. The container metaframe (container.mtfm.io) solves this problem by providing public compute queues (a “grid”) that metapage workflows can “plug” into like an electrical grid.

You can provide your own computer(s) to your personal grid (queue), or plug a cluster into the grid so your entire team can share the same resources (coming soon). When other people run your workflows, the workflows automatically run on their own grid or their own computer.

We abstract away where compute is done, so you can focus on what matters.

Quickstart: Run a worker

Step 1: run a worker on your computer(s)

To run containers, you need to run a worker:

| mode | description |
|---|---|
| run in local mode | Start here. All data stays local to this computer and never goes to cloud storage. Also quicker and simpler. Desktop only; mobile/tablet not yet supported. |
| run in remote mode | Choose this if you want access to scalable compute resources, cloud storage, or viewing workflows on phones and tablets. |

Soon on our roadmap are cloud compute resources on demand, with zero configuration. Until then, you must run your own workers.

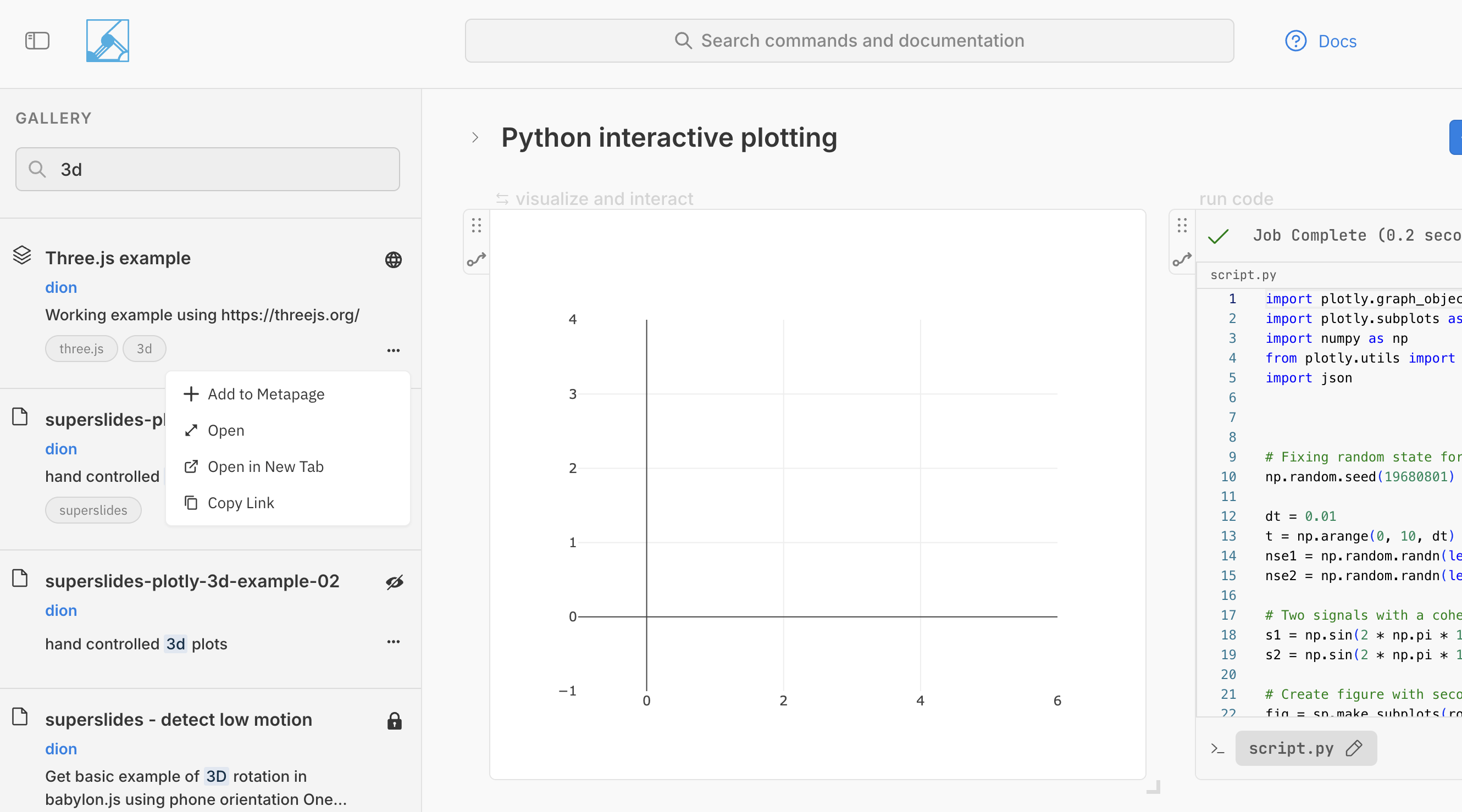

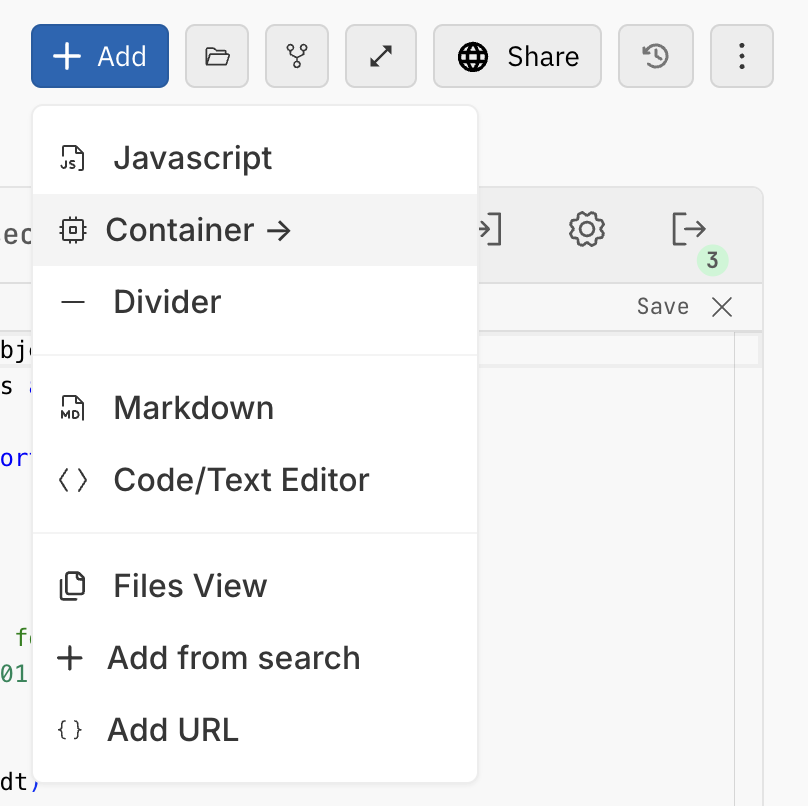

Step 2: Add a container

Either add a container from searching or add directly.

Via search:

Via [+ Add] a “Docker Container”:

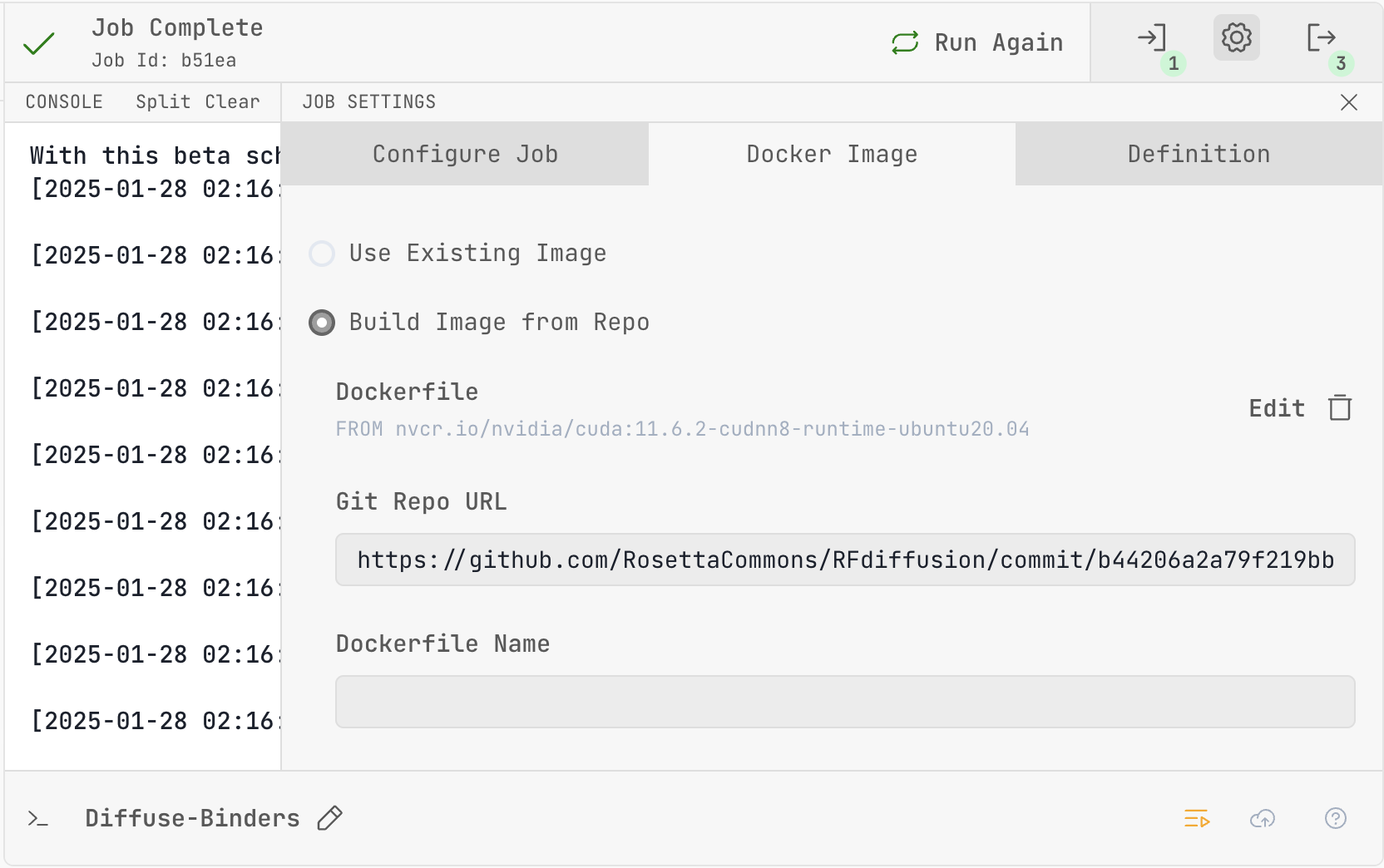

Step 3: Configure and run the container

Configure your docker job, then run it.

For private github repos, add the token as part of the URL:

https://<token>@github.com/username/repository

WARNING: do not share this workflow. Adding private credentials safely is on our roadmap: https://github.com/metapages/docs.metapage.io/issues/18
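A minimal sketch of building the token URL above without typing the token into the URL by hand. The function name `with_token` and the environment variable `GITHUB_TOKEN` are hypothetical choices for illustration. The resulting URL still contains the secret, so the warning above applies to it as well.

```python
import os
from urllib.parse import quote, urlsplit, urlunsplit


def with_token(repo_url: str, token: str) -> str:
    """Return `repo_url` with `token` embedded as the URL's user info.

    Any user info already present in the URL is replaced.
    """
    parts = urlsplit(repo_url)
    # Keep host (and port, if any) but drop existing "user@" prefixes
    host = parts.netloc.rpartition("@")[2]
    # Percent-encode the token so special characters cannot break the URL
    netloc = f"{quote(token, safe='')}@{host}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


# Hypothetical usage: read the token from the local environment
token = os.environ.get("GITHUB_TOKEN", "<token>")
repo = with_token("https://github.com/username/repository", token)
```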

Docker environment

System Environment Variables

These env vars are always set in your container:

| environment variable | description |
|---|---|
| JOB_INPUTS | Default: /inputs. The directory where job input files are copied. |
| JOB_OUTPUTS | Default: /outputs. The directory where job output files will be copied when the job finishes successfully. |
| JOB_CACHE | Default: /job-cache. Shared directory for caching e.g. large models. |
| JOB_ID | sha256 of the definition + inputs |
| JOB_URL_PREFIX | https://container.mtfm.io/j/<jobId> |
| JOB_OUTPUTS_URL_PREFIX | https://container.mtfm.io/j/<jobId>/outputs/ |
| JOB_INPUTS_URL_PREFIX | https://container.mtfm.io/j/<jobId>/inputs/ |
| CUDA_VISIBLE_DEVICES | index of assigned GPU, if the worker has GPUs assigned and the job requests one |
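A script inside the container might collect these variables in one place, as in the sketch below. The `JobEnv` class and `read_job_env` function are hypothetical names; the fallback paths are the defaults listed in the table, which also makes the script usable outside a worker.

```python
import os
from dataclasses import dataclass
from pathlib import Path
from typing import Mapping, Optional


@dataclass(frozen=True)
class JobEnv:
    inputs: Path            # where input files are copied
    outputs: Path           # where output files should be written
    cache: Path             # cache directory shared by jobs on one host
    job_id: Optional[str]   # None when not running as a job
    gpu: Optional[str]      # None when no GPU was assigned


def read_job_env(env: Mapping[str, str] = os.environ) -> JobEnv:
    """Read the system environment variables, falling back to the documented defaults."""
    return JobEnv(
        inputs=Path(env.get("JOB_INPUTS", "/inputs")),
        outputs=Path(env.get("JOB_OUTPUTS", "/outputs")),
        cache=Path(env.get("JOB_CACHE", "/job-cache")),
        job_id=env.get("JOB_ID"),
        gpu=env.get("CUDA_VISIBLE_DEVICES"),
    )


job = read_job_env()
print(f"inputs={job.inputs} outputs={job.outputs} gpu={job.gpu}")
```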

The public URL of e.g. job outputs is

https://container.mtfm.io/j/<jobId>/outputs/<file name>

or

https://container.mtfm.io/q/<queueId>/j/<jobId>/outputs/<file name>
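The two URL forms above can be produced with a small helper, sketched here. The function name `output_url` is hypothetical, and percent-encoding the file name is an assumption about what the server expects.

```python
from typing import Optional
from urllib.parse import quote

BASE_URL = "https://container.mtfm.io"


def output_url(job_id: str, file_name: str, queue_id: Optional[str] = None) -> str:
    """Return the public URL of one output file of a job.

    With `queue_id`, the queue-scoped form `/q/<queueId>/j/<jobId>/...`
    is returned; otherwise the shorter `/j/<jobId>/...` form.
    """
    queue_part = f"/q/{quote(queue_id, safe='')}" if queue_id else ""
    # Assumption: file names are percent-encoded, keeping "/" for sub-paths
    return f"{BASE_URL}{queue_part}/j/{quote(job_id, safe='')}/outputs/{quote(file_name)}"


print(output_url("abc123", "data.csv"))
# → https://container.mtfm.io/j/abc123/outputs/data.csv
```

Inside a running job, the same URL can be formed by appending the file name to `JOB_OUTPUTS_URL_PREFIX`.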

User Environment Variables

All search params in the URL (…?<key>=<value>) are injected into the container as env vars:

| name | value |
|---|---|
| <key> | <value> |
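Since environment variables are always strings, a script usually needs to convert them. The sketch below shows one way to do that; the helper `param` and the parameter names `epochs` and `label` are hypothetical, and it assumes the variable name matches the URL key exactly.

```python
import os
from typing import Callable, Mapping, TypeVar

T = TypeVar("T")


def param(
    name: str,
    default: T,
    cast: Callable[[str], T] = str,
    env: Mapping[str, str] = os.environ,
) -> T:
    """Return the URL parameter `name` from the environment, converted with `cast`.

    Falls back to `default` when the parameter was not present in the URL.
    """
    raw = env.get(name)
    return default if raw is None else cast(raw)


# Hypothetical URL: …?epochs=5&label=run-a
epochs = param("epochs", 1, int)
label = param("label", "unnamed")
```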

Inputs, outputs, and caching

- Inputs are copied into the directory /inputs. The env var $JOB_INPUTS is set to this directory.
- Copy output files to /outputs. The env var $JOB_OUTPUTS is set to this directory.
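The read-from-inputs, write-to-outputs pattern can be sketched as follows. The function `process_inputs` and its upper-casing transformation are placeholders for illustration only, and the sketch assumes the input files are UTF-8 text.

```python
import os
from pathlib import Path
from typing import List, Optional


def process_inputs(
    inputs_dir: Optional[str] = None,
    outputs_dir: Optional[str] = None,
) -> List[str]:
    """Upper-case every input file and write the result to the outputs directory.

    Returns the names of the files that were written.
    """
    inputs = Path(inputs_dir or os.environ.get("JOB_INPUTS", "/inputs"))
    outputs = Path(outputs_dir or os.environ.get("JOB_OUTPUTS", "/outputs"))
    outputs.mkdir(parents=True, exist_ok=True)

    written = []
    for src in sorted(p for p in inputs.iterdir() if p.is_file()):
        # Placeholder transformation: replace with the real computation
        text = src.read_text(encoding="utf-8").upper()
        dest = outputs / f"{src.stem}.upper{src.suffix}"
        dest.write_text(text, encoding="utf-8")
        written.append(dest.name)
    return written
```

Calling `process_inputs()` with no arguments inside a job uses the directories from the environment; passing explicit directories makes the same function easy to test locally.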

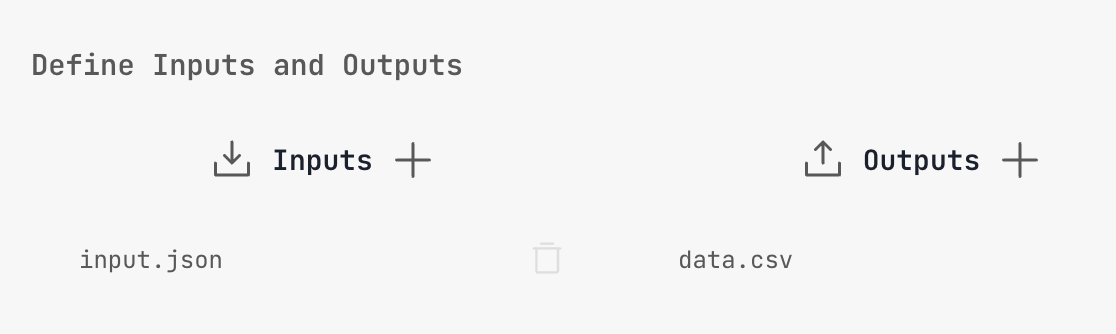

Define Inputs and Outputs

In Settings / Definition you can define inputs and outputs. This doesn't change how the code runs, but it helps to quickly connect other metaframes in metapages.

In this example, we defined an input, input.json, and an output, data.csv:

You will see these inputs and outputs automatically in the metapage editor.
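A container script matching the input.json and data.csv definition from this example might look like the sketch below. The function name `json_to_csv` is hypothetical, and the sketch assumes that input.json holds a list of flat objects sharing the same keys, which this page does not specify.

```python
import csv
import json
import os
from pathlib import Path
from typing import Optional


def json_to_csv(inputs_dir: Optional[str] = None, outputs_dir: Optional[str] = None) -> Path:
    """Convert input.json (a list of flat objects) into data.csv."""
    inputs = Path(inputs_dir or os.environ.get("JOB_INPUTS", "/inputs"))
    outputs = Path(outputs_dir or os.environ.get("JOB_OUTPUTS", "/outputs"))
    outputs.mkdir(parents=True, exist_ok=True)

    rows = json.loads((inputs / "input.json").read_text(encoding="utf-8"))
    # Assumption: every row has the same keys as the first one
    fieldnames = list(rows[0].keys()) if rows else []

    out_path = outputs / "data.csv"
    # newline="" lets the csv module control line endings itself
    with out_path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return out_path
```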

Directory for caching data and large ML models

The directory defined in the env var $JOB_CACHE (defaults to /job-cache) is shared between all jobs running on a single host. Use this location to store e.g. large data sets and AI models.

The cache is not shared between worker instances, only between jobs running on a single instance or computer. It comes with no guarantees on persistence.
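Because the cache comes with no persistence guarantees, a job should always check whether a cached file exists and recreate it when it is missing. The sketch below shows one way to do this; `cached_file` and its `create` callback are hypothetical names, and the write-then-rename step is a general precaution rather than something this page requires.

```python
import os
from pathlib import Path
from typing import Callable, Optional


def cached_file(
    name: str,
    create: Callable[[Path], None],
    cache_dir: Optional[str] = None,
) -> Path:
    """Return the path of `name` in the job cache, creating it if missing.

    `create` receives a path and must write the file there, for example
    by downloading a model.
    """
    cache = Path(cache_dir or os.environ.get("JOB_CACHE", "/job-cache"))
    cache.mkdir(parents=True, exist_ok=True)
    target = cache / name

    if not target.exists():
        # Write under a temporary name, then rename, so that other jobs
        # on the same host never read a half-written file
        tmp = cache / f"{name}.{os.getpid()}.tmp"
        create(tmp)
        os.replace(tmp, target)
    return target
```

A job would call `cached_file("model.bin", download_model)` where `download_model` is whatever function fetches the file; on later runs on the same host, the download is skipped if the file is still there.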

Description

container.mtfm.io runs docker containers on workers. It is currently in beta.

- Run any publicly available docker image: Python, R, C++, Java, ... anything.

- Bring your own workers

- Currently individual machines are supported, but kubernetes and nomad support coming soon

- Your queue is simply an unguessable hash. Do not share it without consideration.

Use cases:

- machine learning pipelines

- data analysis workflows

Any time the inputs change (and on start) the configured docker container is run:

- /inputs is the location where inputs are copied as files

- /outputs: any files here when the container exits are passed on as metaframe outputs

Versioned. Reproducible. No client install requirements: as long as you have at least one worker running somewhere, you can run any programming language.

Example URL

Click here to run a python command in a container

Github repository

https://github.com/metapages/compute-queues